How to Train ChatGPT on Your Own Data (2025 Guide)

TL;DR: Stop settling for generic AI responses. Whether you want ChatGPT to master your company FAQs, write in your brand voice, or become a customer support expert, training it on your data transforms it from a generalist into your specialized AI assistant. You'll discover four proven methods (from free prompting to advanced fine-tuning) so you can choose what works for your needs and budget.

Your ChatGPT probably gives you impressive but wrong answers about your business.

That's because out of the box, ChatGPT knows nothing about your internal policies, product specifications, or company voice. It was trained on billions of words from the internet, making it a brilliant generalist but a terrible specialist for your specific needs.

Training ChatGPT on your data changes everything.

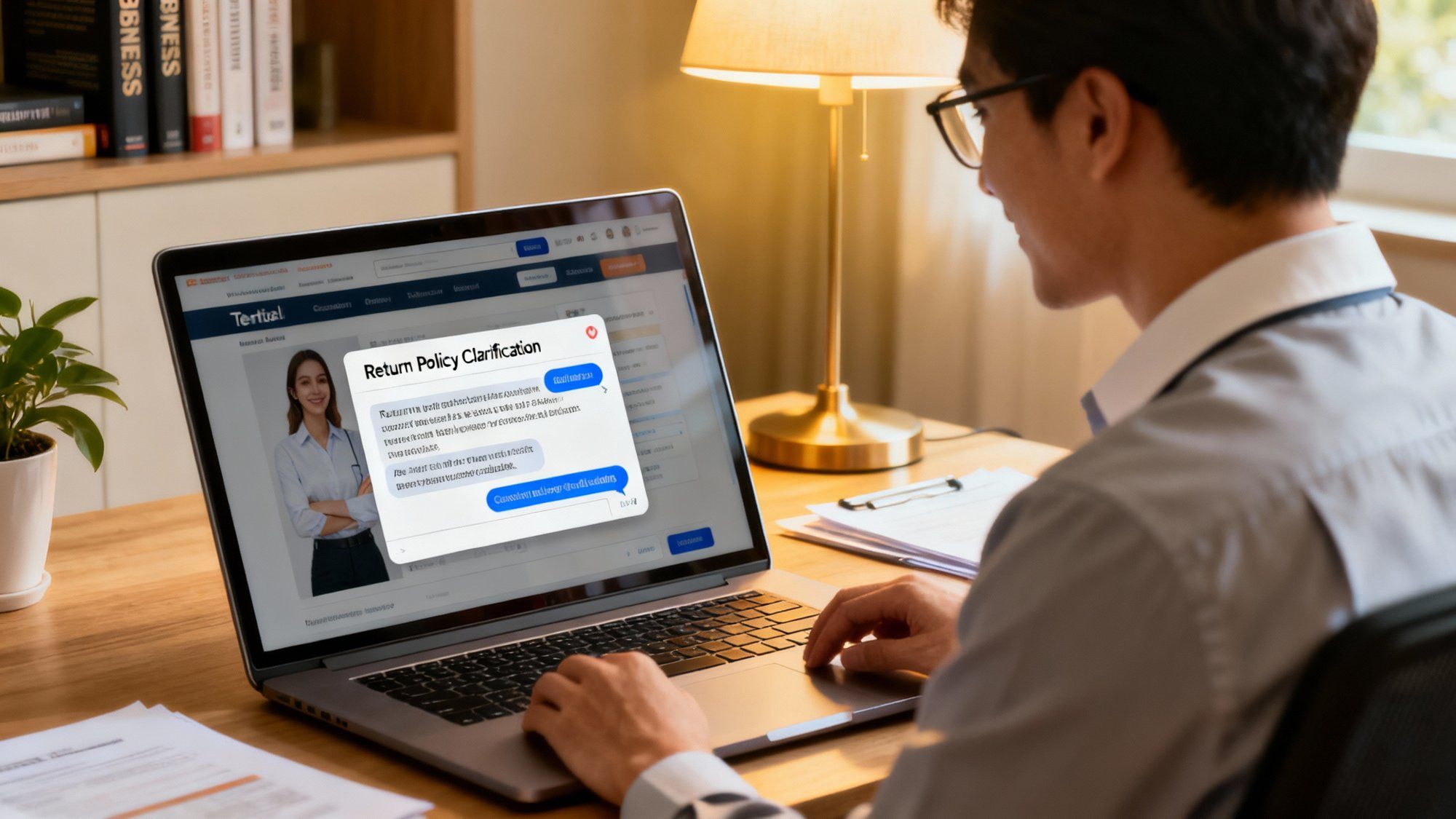

Picture this: a customer asks about your return policy and instantly gets exactly the right answer, pulled from your knowledge base. Or an AI assistant drafts emails in your brand voice without you having to coach it every time. OpenAI reported that customers in its fine-tuning beta saw better instruction-following, more reliable output formatting, and a more consistent tone.

The real-world impact? AI agents trained on knowledge bases can resolve up to 70% of routine customer queries instantly. That frees your team for complex issues that actually need human expertise.

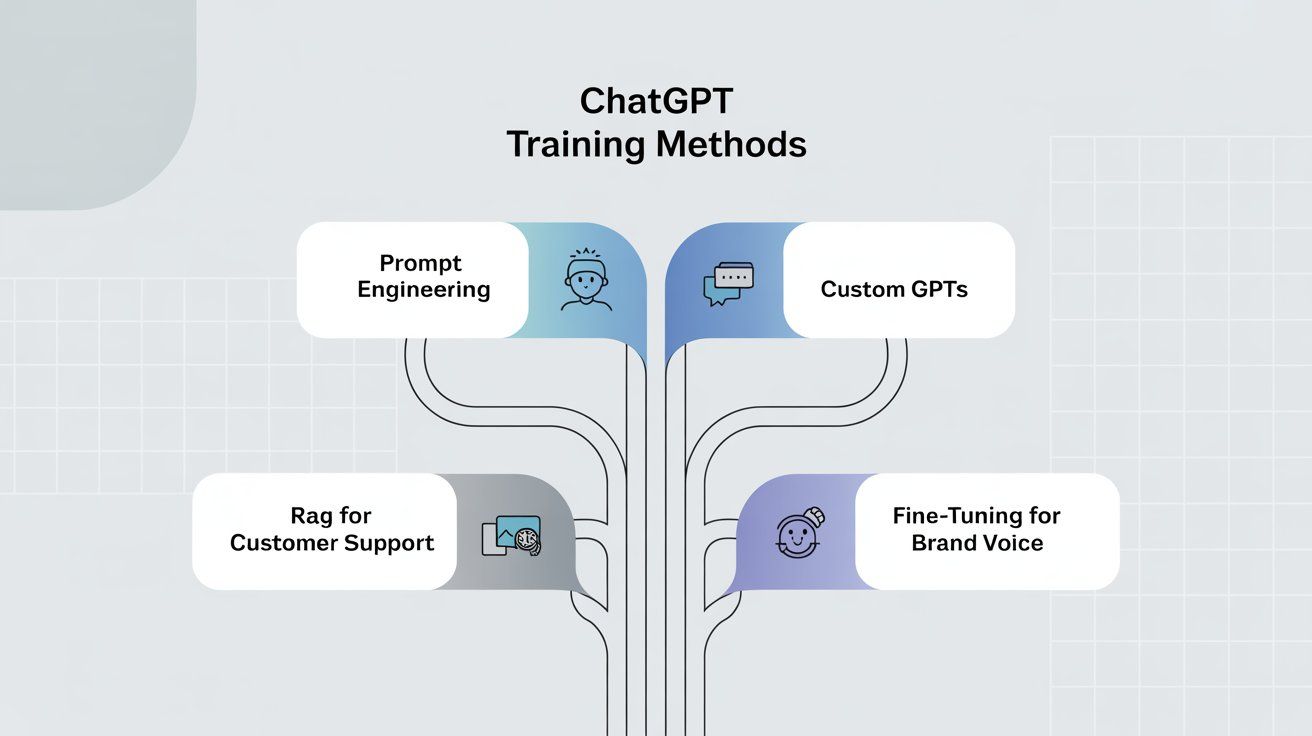

When people say "train ChatGPT," they could mean several different things:

→ Quick customization: Using prompts or Custom GPTs to feed it your information

→ Model fine-tuning: Actually adjusting the AI's weights with your examples

→ Retrieval augmentation: Giving ChatGPT access to search your documents in real time

Each approach has trade-offs between ease, cost, and power. Good news though: you don't need an AI PhD or a massive budget to get started.

So let's break down all four methods: prompt engineering, Custom GPTs, fine-tuning, and retrieval-augmented generation (RAG). You'll pick the right one (or combination) for your situation.

The simplest way to "train" ChatGPT is prompt engineering: just telling it what you want every time you chat.

How it works: You start each conversation by pasting your company info, then ask your question. Something like: "You're a support agent for ACME Corp. Here are our policies: [paste policy text]. Now answer customer questions using only this information."
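If you call the model through the API instead of the ChatGPT app, the same idea works by putting your policy text in the system message of every request. Here is a minimal Python sketch that builds a Chat Completions request body. The policy text, the helper name `build_support_request`, and the `gpt-4o-mini` model name are illustrative assumptions. You would send the resulting body with OpenAI's SDK or any HTTP client.

```python
import json

# Hypothetical policy text; in practice you would paste your own documents here.
POLICY_TEXT = """Returns: sale items can be returned within 30 days of purchase with a receipt.
Shipping: standard delivery takes 3-5 business days."""


def build_support_request(question: str, policies: str, model: str = "gpt-4o-mini") -> dict:
    """Build a Chat Completions request body that grounds the model in pasted policy text."""
    system_prompt = (
        "You're a support agent for ACME Corp. "
        "Answer customer questions using only the policies below. "
        "If the answer isn't in the policies, say you don't know.\n\n"
        f"Policies:\n{policies}"
    )
    return {
        "model": model,  # assumed model name; swap in whichever model you use
        "messages": [
            {"role": "system", "content": system_prompt},  # your "training" lives here
            {"role": "user", "content": question},
        ],
    }


if __name__ == "__main__":
    request = build_support_request("Can I return a sale item after two weeks?", POLICY_TEXT)
    print(json.dumps(request, indent=2))
```

The sketch keeps the policy text in the system message and tells the model to admit when the answer isn't there. That combination helps keep it from improvising. The trade-off is that the whole policy text is re-sent, and billed, with every request.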

You can also set custom instructions (available on both free and paid ChatGPT plans) that persist across chats, so you don't have to keep repeating yourself.

What's great: It's immediate and free. Perfect for quick tests or one-off tasks. You can iterate by tweaking your prompt wording on the fly.

Where it breaks down: Everything you paste has to fit in ChatGPT's context window (large but not infinite). That's fine for a few pages, but forget about feeding it your entire knowledge base.

Plus, there's no permanent learning. Start a new chat? The AI forgets everything unless you repeat it all again.

Key insight: For customer service scenarios, copy-pasting documents every time gets clunky fast. But prompt engineering's still the perfect starting point to test your ideas before investing in more comprehensive automation solutions.

OpenAI's Custom GPTs feature lets ChatGPT Plus subscribers ($20/month) create personalized bots pre-loaded with their data.

Think of it as your own ChatGPT that already knows your stuff.

The setup process: Go to the GPTs section in ChatGPT, create a new GPT, give it a name and instructions, then upload up to 20 files. Your custom GPT searches those documents and uses them as its knowledge source when answering.

You can share it with your team via a link. Or keep it private.

Key advantage: Unlike plain prompting, Custom GPTs remember your uploaded data across all sessions. No more re-explaining your business every time.

Perfect for:

• Small businesses with relatively static info (under 20 documents)

• Internal team knowledge sharing

• Testing ideas before building something bigger

• Creating specialized chatbots for specific tasks

The limitations:

• 20-file maximum won't cover enterprise knowledge bases

• Manual updates required when your info changes

• Lives inside ChatGPT's interface only (can't embed on your website)

• If you share publicly, anyone with the link can access it

A startup founder might upload their investor FAQ and blog posts to create a bot answering questions in their voice. But if you need a customer-facing chatbot on your website or integrated with WhatsApp, you'll need different approaches.

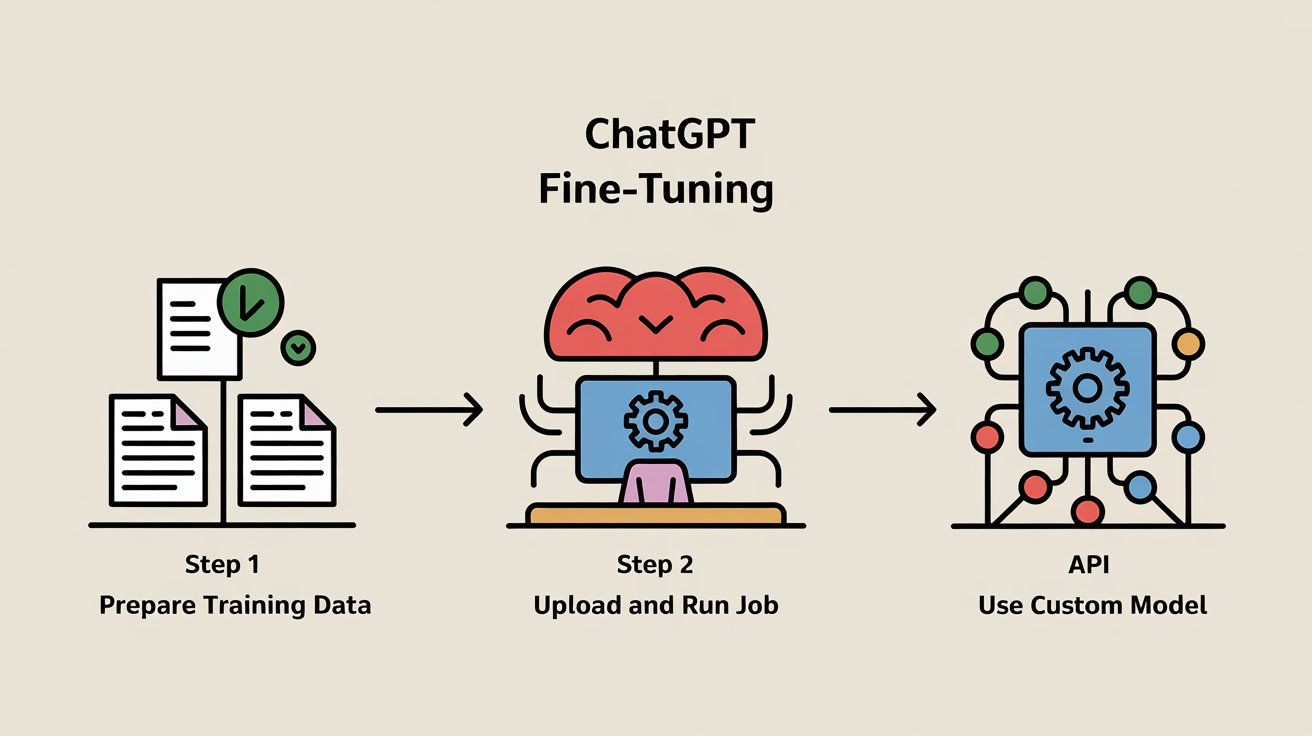

Fine-tuning means taking a base model like GPT-4o mini or GPT-3.5 Turbo and training it further on your own examples so it adapts to your tasks or domain.

Unlike the previous methods, this actually changes the model's internal weights to better match your desired outputs.

OpenAI's testing found fine-tuned models follow instructions for tone, formatting, and behavior much more consistently.

It's especially powerful for:

→ Maintaining consistent brand voice across all responses

→ Following specific output formats (like always responding in JSON)

→ Learning nuanced patterns from high-quality example datasets

→ Shorter prompts (the model internalizes instructions you'd otherwise repeat in every request)

Step 1: Prepare training data

Create a JSONL file (one JSON object per line) of example conversations. For a support bot, each example pairs a user question with the ideal assistant answer, formatted as chat messages. Aim for 50-100+ high-quality examples.
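For OpenAI's chat models, each line of the training file holds a short `messages` conversation with a system, user, and assistant turn. Here is a minimal Python sketch that writes that format. The example pairs, system prompt, helper names, and file name are all hypothetical placeholders for your own support data.

```python
import json

# Hypothetical support examples; a real dataset should have 50-100+ of these.
EXAMPLES = [
    ("What's your return window for sale items?",
     "Sale items can be returned within 30 days of purchase. Just bring your receipt!"),
    ("How long does standard shipping take?",
     "Standard delivery usually takes 3-5 business days. We'll email you a tracking link."),
]

SYSTEM_PROMPT = "You are ACME Corp's friendly support assistant."


def to_chat_record(question: str, answer: str, system: str = SYSTEM_PROMPT) -> dict:
    """Wrap one question/answer pair as a chat-format training example."""
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},  # the ideal reply the model should learn
        ]
    }


def write_jsonl(pairs, path: str) -> int:
    """Write one JSON object per line (JSONL) and return the number of examples written."""
    with open(path, "w", encoding="utf-8") as f:
        for question, answer in pairs:
            f.write(json.dumps(to_chat_record(question, answer), ensure_ascii=False) + "\n")
    return len(pairs)


if __name__ == "__main__":
    count = write_jsonl(EXAMPLES, "support_training.jsonl")
    print(f"Wrote {count} examples")
```

Running it produces `support_training.jsonl`, the kind of file you would upload in Step 2. The assistant replies are what the model learns to imitate, so write them exactly the way you want your bot to answer.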

Step 2: Upload and run the job

Upload your dataset through OpenAI's fine-tuning dashboard or API and start a fine-tuning job. Jobs typically take minutes to a few hours depending on data size.

Step 3: Use your custom model

Once ready, you call the API with your custom model ID. It now behaves according to learned patterns without needing detailed prompts every time.

Fine-tuning is surprisingly affordable for most use cases. Training on 100,000 tokens (roughly 75,000 words) for 3 epochs costs about $2.40 at GPT-3.5 Turbo's training rate of $0.008 per 1,000 tokens, according to OpenAI's pricing. Using the resulting model costs more per token than the base model (sometimes several times more), but for typical support replies you're still talking fractions of a penny per response.
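The $2.40 figure is simple arithmetic: tokens in the training file × epochs × the per-token training price. Here that price is $8 per million tokens, which is $0.008 per 1K, GPT-3.5 Turbo's rate in OpenAI's example. The minimal Python sketch below reproduces that figure and adds a per-reply estimate. The 500 input / 200 output token counts and the $3 / $6 per-million usage prices are illustrative assumptions, so plug in current numbers from OpenAI's pricing page.

```python
def training_cost(training_tokens: int, epochs: int, price_per_million: float) -> float:
    """Fine-tuning cost = tokens in the training file x epochs x training price."""
    return training_tokens * epochs * price_per_million / 1_000_000


def response_cost(input_tokens: int, output_tokens: int,
                  input_price_per_million: float, output_price_per_million: float) -> float:
    """Cost of one API call; prompt (input) and reply (output) tokens are priced separately."""
    return (input_tokens * input_price_per_million
            + output_tokens * output_price_per_million) / 1_000_000


if __name__ == "__main__":
    # $8 per 1M tokens = $0.008 per 1K, GPT-3.5 Turbo's training rate.
    print(f"Training: ${training_cost(100_000, 3, 8.00):.2f}")  # Training: $2.40
    # Assumed usage prices for a fine-tuned model; check OpenAI's current pricing page.
    per_reply = response_cost(500, 200, input_price_per_million=3.00, output_price_per_million=6.00)
    print(f"Per reply: ${per_reply:.4f}")  # Per reply: $0.0027
```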

| Pros | Cons |
|---|---|
| Learns your exact patterns permanently | Requires clean, quality dataset preparation |
| Consistent voice without coaching | Can still hallucinate beyond training scope |
| Private to your organization | Updating requires another training run |
| Efficient for high-volume repetitive tasks | More technical than plug-and-play options |

Bottom line: Fine-tuning shines when you have well-defined tasks and quality examples. It's perfect for maintaining brand voice or handling specific formats, but it's often combined with other methods for best results.

What's transforming business AI in 2025? Don't change the model. Give it a smart library to search instead.

Retrieval-augmented generation (RAG) pairs ChatGPT with a vector database that stores your documents. When someone asks a question, the system finds relevant text snippets from your knowledge base and feeds them to ChatGPT as context.

It's like giving the AI an open-book exam instead of expecting it to memorize everything.

Say you have 100 company articles in your knowledge base:

1. All articles get split into chunks, converted into numerical vectors (embeddings), and indexed in a vector database

2. Customer asks: "What's the warranty on Product X?"

3. System searches your database for the most relevant content

4. Retrieves matching paragraphs (maybe from your FAQ and product manual)

5. Inserts those snippets into ChatGPT's prompt with instructions to answer based on provided info

6. ChatGPT generates a response grounded in your actual documentation
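To make those six steps concrete, here is a deliberately tiny, dependency-free Python sketch. It makes two simplifying assumptions: word-count vectors stand in for a real embedding model, and an in-memory dict stands in for a vector database. The documents and function names are hypothetical. What carries over to a real system is the pipeline shape: index once, retrieve by similarity, then build a grounded prompt.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base: document ID -> text.
DOCS = {
    "faq-warranty": "Product X comes with a two-year warranty covering manufacturing defects.",
    "faq-returns": "Sale items can be returned within 30 days of purchase with a receipt.",
    "manual-x": "To reset Product X, hold its power button for ten seconds.",
}


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Step 1: index every document once (a vector database plays this role in production).
INDEX = {doc_id: embed(text) for doc_id, text in DOCS.items()}


def upsert(doc_id: str, text: str) -> None:
    """Add or replace one document; only that document is re-embedded, no retraining."""
    DOCS[doc_id] = text
    INDEX[doc_id] = embed(text)


def retrieve(question: str, k: int = 2) -> list[str]:
    """Steps 3-4: return the IDs of the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(INDEX, key=lambda doc_id: cosine(q, INDEX[doc_id]), reverse=True)
    return ranked[:k]


def build_prompt(question: str, k: int = 2) -> str:
    """Step 5: insert the retrieved snippets into the prompt with grounding instructions."""
    context = "\n".join(f"[{doc_id}] {DOCS[doc_id]}" for doc_id in retrieve(question, k))
    return (
        "Answer using only the context below. If the answer isn't there, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


if __name__ == "__main__":
    # Step 6 would send this prompt to the chat model.
    print(build_prompt("What's the warranty on Product X?"))
```

In production you would replace `embed` with an embedding API and `INDEX` with a vector store. The `upsert` helper mirrors the "no retraining" point: changing one policy only re-embeds that one document.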

Far fewer hallucinations: When ChatGPT has direct references to your data, it's much less likely to make stuff up. Some industry reports claim properly implemented RAG cuts hallucinations by 80-90%, though results depend heavily on retrieval quality.

Instant updates: Change a policy document, and as soon as it's re-indexed, the next customer question uses the new information. No retraining required.

Massive scale: You can work with thousands of documents because the model only sees the relevant snippets each time.

Full control: You instruct the AI to answer only from what you provide, and you can trace answers back to the source documents. Perfect for compliance-sensitive businesses.

| Feature | RAG Benefit | Traditional Training |
|---|---|---|
| Dataset Size | Scales to thousands of documents | Limited by what fits in prompts or training examples |
| Update Speed | Instant (just change docs) | Requires a new training run |
| Accuracy | Fewer hallucinations (some reports cite 80-90%) | More prone to making things up |
| Cost | Embedding, storage, and per-query API costs | Training + higher API costs |
| Compliance | Answers traceable to source documents | Hard to restrict responses |

The challenge: Setting up RAG from scratch takes real technical work on embedding models, vector databases, and retrieval pipelines.

The solution: You don't have to build it yourself. Modern AI platforms handle the complexity behind the scenes.

For example, Spur's platform lets you upload your knowledge base documents, and the system automatically creates a RAG-powered assistant that can deploy across live chat, WhatsApp, Instagram, and Facebook Messenger. You get the accuracy benefits without the technical headaches.

A retail company uses RAG for their customer support bot. When a customer asks "What's the return policy for sale items?", the system pulls the exact policy text and responds:

"According to our policy, sale items can be returned within 30 days of purchase with a receipt. Here's the complete policy: [policy details]"

If the policy changes tomorrow, owners just update the FAQ page. The bot references the new information immediately, with zero additional work.

Compare this to a static bot that might give outdated information unless manually retrained.

RAG clearly wins for accuracy and maintenance.

With four different methods, how do you pick? Use this quick decision guide:

| Your Situation | Best Method | Why It Works |
|---|---|---|
| Testing ideas with minimal data | Prompt Engineering | Free, immediate, no commitment |
| Small team FAQ (under 20 docs) | Custom GPTs | No-code, persistent memory |
| Customer support at scale | RAG | Dynamic updates, high accuracy |
| Brand voice consistency | Fine-tuning | Learns your exact style |
| Enterprise deployment | RAG + Fine-tuning | Accuracy plus personality |

Start with prompt engineering if you:

→ Just want to test ChatGPT customization

→ Have only a few pages of information

→ Need results immediately with zero cost

→ Aren't ready to commit resources yet

Example use case: Testing if ChatGPT can help with your customer support emails by including a few policy snippets in prompts.

Try ChatGPT Custom GPTs if you:

→ Have under 20 documents that don't change often

→ Want a no-code solution with persistent memory

→ Can work within the ChatGPT interface

→ Need to share with a small team

Example use case: HR team uploading employee handbook sections for internal Q&A.

Go with RAG when you need:

→ Chatbot integrated with your website or messaging channels

→ Large knowledge base that changes frequently

→ Maximum factual accuracy for customer support

→ Compliance-friendly AI that won't go off-script

Example use case: E-commerce support bot that needs current product info, pricing, and policies across hundreds of SKUs.

Consider fine-tuning if you have:

→ Specific brand voice or tone requirements

→ Structured output format needs

→ High-quality training examples (50+ samples)

→ Tasks requiring a high degree of consistency

Example use case: Support team with thousands of best-response examples wanting to scale their proven approach.

Many successful businesses combine methods:

• RAG for facts + fine-tuning for voice = Accurate answers in your brand tone

• Custom GPT for testing + RAG for production = Quick validation then scalable solution

• Prompting for edge cases + RAG for main queries = Comprehensive coverage

For businesses serious about AI customer support, the training method matters less than the final experience. Your customers don't care if you used RAG or fine-tuning. They want accurate, helpful responses across all their preferred channels.

This is where Spur excels. Instead of building RAG infrastructure from scratch, you get:

→ Actionable AI agents trained on your knowledge base that don't just answer questions (they can check order status, book appointments, or create support tickets)

→ Multi-channel deployment across WhatsApp, Instagram, Facebook Messenger, and live chat from one unified system

→ No-code setup that gets you from documents to deployed chatbot in hours, not weeks

→ Built-in analytics to see what's working and continuously improve your AI's performance

→ Seamless human handoff when the AI reaches its limits

Unlike basic Q&A bots, Spur's AI agents can take actions based on their training. When a customer asks about their order, the agent doesn't just provide generic shipping info. It can look up their specific order and provide real-time updates.

The competitive edge: While other messaging automation tools struggle with AI training on custom knowledge bases or lack comprehensive multi-channel marketing automation, Spur combines both with a user-friendly approach that doesn't require technical expertise.

How long training takes varies dramatically by method:

| Method | Time Required | Notes |
|---|---|---|
| Prompt engineering | Minutes | Just testing queries in real time |
| Custom GPTs | Minutes | Upload files, then test immediately |
| Fine-tuning | Minutes to a few hours | End-to-end for the training job, depending on data size |
| RAG setup | Seconds to hours | For initial document indexing |

Good news: these processes are getting faster with better tools. But don't expect to fine-tune a model 10 minutes before a big demo. Give yourself a buffer of at least a few hours for anything beyond prompting.

Training costs range from free to very reasonable:

• Prompt-only approaches: Free (beyond your time and maybe ChatGPT Plus at $20/month)

• Custom GPTs: Just the ChatGPT Plus subscription

• Fine-tuning: Often under $10 for datasets of a few hundred thousand tokens

• RAG solutions: Vector database costs (many free tiers) plus API usage

For perspective, a small-business chatbot handling hundreds of customer questions a month typically costs well under $100/month in API calls. The dominant cost is usually ongoing API usage, not the training itself.

How much data do you need? Quality beats quantity, but here are practical minimums:

→ Fine-tuning: At least 50 high-quality examples, ideally hundreds

→ RAG: Even a dozen well-written FAQ pairs can be valuable

→ Style training: A few dozen pages of your writing to capture voice

Pro tip: Start with your core content that answers 80% of common questions. You can always expand from there. Cover the breadth of expected queries rather than going deep on narrow topics.

Can you do any of this for free? Partially, yes. Prompt engineering with the free ChatGPT tier is completely free. You can also experiment with open-source RAG tools and free API credits.

But for reliable business deployment, you'll typically need some paid services, whether that's ChatGPT Plus, API usage, or a managed platform. The good news: you can validate ideas for free before spending money.

Keeping your AI current is easiest with RAG: just add new documents to your knowledge base, and once they're indexed they're available for queries. Some platforms auto-sync with Google Drive or Notion.

Fine-tuned models require retraining to include new information. The model's knowledge is static once trained, so updates can be resource-intensive if frequent.

Custom GPTs fall in between: You manually replace uploaded files with newer versions (within the 20-file limit).

Bottom line: For rapidly changing information, RAG wins hands down.

The winning pattern in 2025: Use RAG as your backbone for customer-facing Q&A and support, adding fine-tuning or prompt engineering for brand personality and specialized tasks.

For example, if you're a SaaS company:

• Feed your knowledge base articles via RAG for accurate, current answers

• Use prompting or light fine-tuning for friendly tone matching your support team

• Integrate with Spur's custom AI actions (checking order status, creating tickets, booking demos)

The key is choosing tools that integrate with your business channels (website chat, WhatsApp, Instagram, etc.) rather than creating AI assistants that live in isolation.

Can ChatGPT learn to write in your voice? Absolutely. You have several options:

• Example-based prompting: Include samples of your writing style in prompts or custom instructions

• Custom GPT with your content: Upload your past writings so the model has style references

• Fine-tuning for style: Train on 100+ examples of your writing to learn word choice, tone, and formatting patterns

• Continuous refinement: Review responses and feed your corrections back into your prompts, examples, or training data to improve style alignment over time
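Example-based (few-shot) prompting is the quickest of these to try. Each writing sample becomes a user/assistant turn, so the model sees your voice in action before it handles the new request. Here is a minimal Python sketch. The samples, the system wording, and the `build_style_messages` name are hypothetical, and the resulting list would be passed as the `messages` of a chat request.

```python
# Hypothetical past replies that show the brand voice.
STYLE_SAMPLES = [
    ("Customer says their order is late.",
     "So sorry about the wait! Your package is on its way, and your tracking link is below."),
    ("Customer asks whether a jacket runs small.",
     "Great question! It runs about half a size small, so we'd suggest sizing up."),
]


def build_style_messages(task: str, samples=STYLE_SAMPLES) -> list[dict]:
    """Few-shot prompting: show example situation/reply pairs, then the new task."""
    messages = [{
        "role": "system",
        "content": "Write in the same warm, concise voice as the example replies.",
    }]
    for situation, reply in samples:
        # Each sample becomes a user/assistant turn the model can imitate.
        messages.append({"role": "user", "content": situation})
        messages.append({"role": "assistant", "content": reply})
    messages.append({"role": "user", "content": task})  # the request you actually want answered
    return messages


if __name__ == "__main__":
    for m in build_style_messages("Customer asks whether we restock sold-out sizes."):
        print(f"{m['role']}: {m['content']}")
```

Because the samples travel with every request, this approach adds input tokens each time. Fine-tuning on the same kind of examples is how you move that style into the model itself.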

With enough quality examples, you can achieve nearly indistinguishable voice matching. Support emails written by a well-trained model can be so on-brand that customers don't realize an AI wrote them.

The trick? Providing varied examples so the model learns your style patterns without overfitting to specific topics or phrases.

Training ChatGPT on your data isn't a distant future concept. It's practical and accessible right now.

Whether you start with simple prompting to test ideas or jump straight into a full RAG setup, the key is matching your approach to your actual needs and resources.

For businesses ready to deploy AI customer support that actually knows their products and speaks their voice, Spur removes the technical barriers while providing the multi-channel reach customers expect.

The question isn't whether you should train ChatGPT on your data.

It's which method will get you there fastest while setting you up for long-term success.

Start small. Test with real queries. Scale what works.

Your customers (and your support team) will thank you for it.